Hattie takes a REDUCTIONIST approach, attempting to break down the complexity of teaching into simple, discrete categories or influences.

However, Nick Rose has alerted me to another form of reductionism, defined by Daniel Dennett as 'Greedy Reductionism', which occurs when,

"in their eagerness for a bargain, in their zeal to explain too much too fast, scientists and philosophers ... underestimate the complexities, trying to skip whole layers or levels of theory in their rush to fasten everything securely and neatly to the foundation."

I think this latter definition better describes Hattie's methodology.

Allerup (2015), writing on 'Hattie's use of effect size as the ranking of educational efforts', observes,

"it is well known that analyses in the educational world often require the involvement of more dimensional (multivariate) analyses" (p. 8).

Hattie stated:

"The model I will present ... may well be speculative, but it aims to provide high levels of explanation for the many influences on student achievement as well as offer a platform to compare these influences in a meaningful way... I must emphasise that these ideas are clearly speculative" (p. 4).

Hattie uses the Effect Size (d) statistic to interpret, compare and rank educational influences.

The effect size is supposed to measure the change in student achievement, which is a controversial topic in and of itself (there are many quite different concepts of what achievement is).
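For readers unfamiliar with the statistic, here is a minimal sketch of one common form of effect size, Cohen's d for two groups: the difference in means divided by the pooled standard deviation. The function name and the scores are hypothetical, invented purely for illustration and not taken from Hattie or the studies he synthesised; pre/post designs use a different denominator.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardised mean difference: (mean_t - mean_c) / pooled SD."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample SDs (n - 1 denominator)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical test scores, invented purely for illustration
treatment = [72, 75, 78, 80, 85]
control = [70, 71, 74, 76, 79]
print(round(cohens_d(treatment, control), 2))  # → 0.92
```

The arithmetic is identical whether the scores come from a standardised test, a teacher-made quiz or a self-report scale, so the resulting d cannot by itself tell you what kind of "achievement" was measured.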

Also, Hattie claims the studies used were of robust experimental design (VL, p. 8). However, a number of peer reviews have shown that he used studies with the much weaker design of simple correlation, which he then converts into an effect size (often incorrectly! See Wecker et al (2016, p. 27)). Hattie then ranks these effect sizes from largest to smallest.
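To make the correlation-to-effect-size step concrete, here is a minimal sketch of one widely used textbook conversion, d = 2r / sqrt(1 - r^2). This is an assumption for illustration only: it presumes a point-biserial correlation between a two-group variable (with roughly equal group sizes) and the outcome, and it is not claimed to be the exact formula Hattie applied in every case. The function name r_to_d is hypothetical.

```python
from math import sqrt


def r_to_d(r):
    """Convert a correlation r to Cohen's d using d = 2r / sqrt(1 - r^2).

    Assumes r is a point-biserial correlation between a two-group
    variable (roughly equal group sizes) and the outcome.
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2 * r / sqrt(1 - r * r)


for r in (0.1, 0.2, 0.3, 0.5):
    print(r, round(r_to_d(r), 2))  # d ≈ 0.2, 0.41, 0.63, 1.15
```

Under this conversion a correlation of only 0.2 already lands just above Hattie's 0.40 hinge point, yet nothing in the arithmetic turns a correlational study into an experimental one.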

The disparate measures of student achievement lead to the classic problem of comparing apples with oranges, and have caused many scholars to question the validity and reliability of Hattie's effect sizes and rankings, e.g., Wecker et al (2016),

"The reconstruction of Hattie's approach in detail using examples thus shows that the methodological standards to be applied are violated at all levels of the analysis. As some of the examples given here show, Hattie's values are sometimes many times too high or low. In order to be able to estimate the impact of these deficiencies on the analysis results, the full analyses would have to be carried out correctly, but for which, as already stated, often necessary information is missing. However, the amount and scope of these shortcomings alone give cause for justified doubts about the resilience of Hattie's results" (p. 31).

"the methodological claims arising from Hattie's approach, and the overall appropriateness of this approach suggest a fairly clear conclusion: a large proportion of the findings are subject to reasonable doubt" (p. 35).

The Problem of Breaking Down the Complexity of Teaching into Simple Categories, Influences or 'Buckets':

"The partitioning of teaching into smallest measurable units, a piecemeal articulation of how to improve student learning, is not too removed from the work of Taylor over 100 years ago. Despite its voluminous and fast expanding literatures, educational administration remains rooted to the same problems of last century." Eacott (2017, p. 10).

Eacott (2018) goes further,

"using a piecemeal reduction in tasks to their smallest components as Taylor did – and the execution of that practice with the greatest impact. Herein is the difference between Hattie’s work and other reform work in Australia in the previous decades. While initiatives such as Productive Pedagogies and Quality Teaching sought to provide teachers and school leaders with resources to reflect on and develop their craft, Hattie’s list of practices by effect size tells educators what to do to get maximum return. The difference is subtle, but important.

Hattie seeks to tell teachers what to do on the basis of what is presented as robust scientific evidence (irrespective of its critiques and failure to systemically refute counter claims).

To that end, the proliferation of brand Hattie throughout the Australian education system is akin to the spread of Taylorism across the U.S.A. school system in the early twentieth century and therefore consistent with Callahan’s claim of a tragedy. This is not to deny the absence of critique, but the at-scale adoption of professional associations (e.g. through keynotes, on-selling of books/products and promotion of workshops), policymakers (e.g. mobilizing the language of ‘Hattie says’ or ‘research from Hattie says’), and school systems (e.g. funding professional learning based on Visible Learning, and the identification of Hattie schools) is evidence of a largely uncritical adoption of the work when no dissenters or counter arguments are included" (p. 6).

McKnight & Whitburn (2018) comment,

"Visible Learning, despite all its complicated effect sizes, equations and figures, is ultimately too simple a mantra. To assume that teachers can see what students see is both ableist and sexist (predicated on masculinist vision), and also arrogant. To reduce teaching to what works is to misunderstand it. To define growth only as getting “to the next level” (Hattie, 2017) is to narrow the potential meanings of success" (p. 17).

Willingham, in his influential book 'Why Don't Students Like School?', reveals this problem from a different angle,

"The gap between research and practice is understandable. When cognitive scientists study the mind, they intentionally isolate mental processes (for example, learning or attention) in the laboratory in order to make them easier to study. But mental processes are not isolated in the classroom. They all operate simultaneously, and they often interact in difficult-to-predict ways. To provide an obvious example, laboratory studies show that repetition helps learning, but any teacher knows that you can’t take that finding and pop it into a classroom by, for example, having students repeat long-division problems until they’ve mastered the process.

Repetition is good for learning but terrible for motivation."

The Sum of the Parts?

Bruce Springsteen inducts U2 into the Rock and Roll Hall of Fame:

'Uno, dos, tres, catorce. That translates as

I think it highly likely teaching is also more than the sum of its parts!

Prof John O'Neill (2012b, p. 5) agrees,

"real classrooms are all about interactions among variables, and their effects. The author implicitly recognises this when he states that ‘a review of non-meta-analytic studies could lead to a richer and more nuanced statement of the evidence’ (p. 255). Similarly, he acknowledges that when different teaching methods or strategies are used together their combined effects may be much greater than their comparatively small effect measured in isolation" (p. 245).

Terry Wrigley (2018), in his critique of Hattie and the EEF, writes,

"These forces and structures sit in various strata of reality, and through their energy, interactions and engagements with the environment, new possibilities of emergence arise: unlike the multiple ‘effect sizes’ of meta-meta-analysis, the sum can be more than the parts and qualitative change can arise" (p. 373).

Contradictions & Inconsistencies:

Hattie defines 'influence' as any effect on student achievement. However, this definition is too vague and leads to many contradictions and inconsistencies. In the preface, Hattie states,

"It is not a book about classroom life, and does not speak to the nuances and details of what happens within classrooms."

However, his influences consist of a large number of classroom nuances: behaviour, feedback, motivation, ability grouping, worked examples, problem-solving, micro teaching, teacher-student relationships, direct instruction, vocabulary programs, concept mapping, peer tutoring, play programs, time on task, simulations, calculators, computer-assisted instruction, etc.

"It is not a book about what cannot be influenced in schools - thus critical discussions about class, poverty, resources in families, health in families, and nutrition are not included."

Yet he has included these in his rankings:

Home environment, d = 0.57 rank 31

Socioeconomic status, d = 0.57 rank 32

Preterm birth weight, d = 0.54 rank 38

Parental involvement, d = 0.51 rank 45

Drugs, d = 0.33 rank 81

Positive view of own ethnicity, d = 0.32 rank 82

Family structure, d = 0.17 rank 113

Diet, d = 0.12 rank 123

Welfare policies, d = -0.12 rank 135

In Hattie's 2012 update of VL he contradicts his 2009 preface,


"I could have written a book about school leaders, about society influences, about policies – and all are worthwhile – but my most immediate attention is more related to teachers and students: the daily life of teachers in preparing, starting, conducting, and evaluating lessons, and the daily life of students involved in learning" (preface).

Blichfeldt (2011) also discusses these contradictions,

"Possible interaction effects between variables such as subjects, age, class, economy, family resources, health and nutrition are not part of the study, and the variables are in any case narrowly represented."

Hattie also promotes Bereiter’s model of learning,

"Knowledge building includes thinking of alternatives, thinking of criticisms, proposing experimental tests, deriving one object from another, proposing a problem, proposing a solution, and criticising the solution" (VL, p. 27).

"There needs to be a major shift, therefore, from an over-reliance on surface information (the first world) and a misplaced assumption that the goal of education is deep understanding or development of thinking skills (the second world), towards a balance of surface and deep learning leading to students more successfully constructing defensible theories of knowing and reality (the third world)" (p. 28).

Prof Jérôme Proulx (2017) outlines the contradiction,

"ironically, Hattie implicitly criticises himself if we rely on the affirmations at the beginning of his book, where he affirms the importance of the three types of learning in education...

So with this comment, Hattie discredits the very work on which he bases his decisions about what represents good ways to teach. Indeed, since the studies he has synthesized to draw his conclusions do not point in the direction of what he himself says represents good teaching, how can he rely on them to draw conclusions about teaching itself?"

Nilholm (2017) also notices this contradiction,

"Early in his book, he points out that the school has a broad assignment, but then he builds a model for teaching and learning that deals only with the knowledge mission (or rather on how knowledge performance can be improved)" (p. 3).

Teacher Training and Experienced Teachers:

Hattie used his own research (Bond, Smith, Baker & Hattie, 2000), a study of 65 teachers comparing National Board Certified (NBC) teachers with non-NBC teachers, and reports it in the last chapter of VL. However, Hattie used the research for a very different purpose: to demonstrate the difference between expert and experienced teachers. Hattie makes the arbitrary judgement that NBC teachers are 'Experienced Experts' while non-NBC teachers are merely 'Experienced'. He does not use student achievement but rather arbitrary criteria, as displayed in the graph below.

Podgursky (2001), in his critique, describes these criteria as 'nebulous standards' and is also rather suspicious of Hattie's rationale for not using student achievement,

"It is not too much of an exaggeration to state that such measures have been cited as a cause of all of the nation’s considerable problems in educating our youth. . . . It is in their uses as measures of individual teacher effectiveness and quality that such measures are particularly inappropriate" (Bond et al., 2000, p. 2).

Hattie concluded that expert (NBC) teachers outperform non-NBC teachers on almost every criterion (VL, p. 260).

Harris and Sass (2009) report that the National Board for Professional Teaching Standards (NBPTS), which administers NBC, generates around $600 million in fees each year (p. 4). Harris and Sass's much larger study, 'covering the universe of teachers and students in Florida for a four-year span' (p. 1), contradicts Hattie's conclusion: 'we find relatively little support for NBC as a signal of teacher effectiveness' (p. 25).

It is interesting that much of Hattie's consulting work with schools involves measuring teachers on the arbitrary categories listed in the graph; a significant omission is Teacher Subject Knowledge.

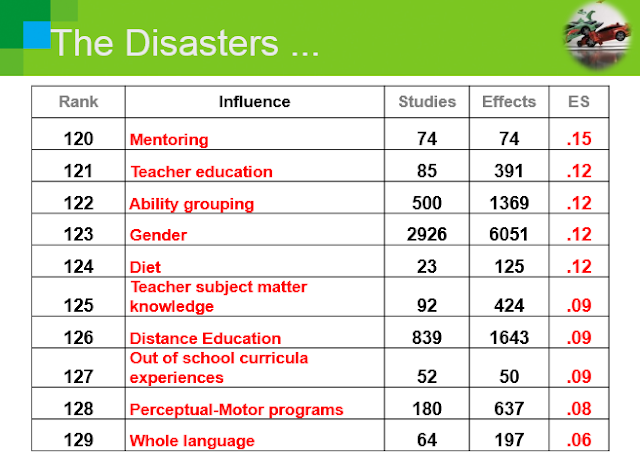

Yet, using the same type of research (e.g. Hacke, 2010) comparing NBC with non-NBC teachers, he uses the low effect size to conclude that Teacher Education is a DISASTER. See Hattie's slides from his 2008 Nuthall lecture,

'Garbage in, Gospel out' Dr Gary Smith (2014)

What has often been missed is that Hattie prefaced his book with significant doubt,

"I must emphasise these are clearly speculative" (VL, p. 4).

Yet his rankings have taken on 'gospel' status due to: major promotion by politicians, administrators and principals (it's in their interest, e.g. class size), very little contesting by teachers (they don't have the time, or who is going to challenge the principal?) and limited access to scholarly critiques.

Probability Statements?

The Common Language Effect Size (CLE) is a probability statistic commonly used to interpret an effect size. However, three peer reviews showed that Hattie calculated all CLEs incorrectly (some of his probabilities were negative, others greater than 1!). As a result, he now claims the CLE statistic is not important and instead focuses on the interpretation that an effect size of d = 0.40 is the 'hinge point', claiming this is equivalent to a year’s progress. However, there are significant problems with this interpretation.

More recently, Hattie seems to have adopted another highly doubtful interpretation of probability. In an interview with Knudsen (2017), Hattie states,
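To see why a negative probability, or one greater than 1, can only be a calculation error, here is a minimal sketch of the CLE under its usual textbook assumptions (McGraw and Wong's formulation for two normally distributed groups with equal variances): CLE = Phi(d / sqrt(2)), the probability that a randomly chosen student from the treatment group outscores a randomly chosen student from the control group. The function names are hypothetical, and this is not a reconstruction of Hattie's own calculation.

```python
from math import erf, sqrt


def normal_cdf(x):
    """Standard normal cumulative distribution function, Phi(x)."""
    return 0.5 * (1 + erf(x / sqrt(2)))


def common_language_es(d):
    """CLE: P(random treated score > random control score).

    Assumes both groups are normal with equal variances, so CLE = Phi(d / sqrt(2)).
    """
    return normal_cdf(d / sqrt(2))


for d in (-0.5, 0.0, 0.4, 1.0, 2.0):
    print(d, round(common_language_es(d), 3))
```

Because Phi is a cumulative probability, every output lies between 0 and 1: d = 0 gives exactly 0.5, and even d = 0.40 only raises the chance of the treated student scoring higher to roughly 61%.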

"The research studies in VL offer probability statements – there are higher probabilities of success when implementing the influences nearer the top than bottom of the chart" (p. 7).

'Materialists and madmen never have doubts' G. K. Chesterton

PEDAGOGY:

Larsen (2014),

"future expectations that Hattie evidence-based credos and meta-studies can solve all the problems in learning institutions forget that not very many decades ago the absolute buzz-words (at least in major parts of Denmark and Germany) were "experiential learning," "experiential pedagogy," "critical reform pedagogy," and "pedagogy of resistance." Why do we ‘forget’ to talk with dignity and curiosity about the teachers’ and students’ experience (i.e. Erfahrung in German, erfaring in Danish), meaning "elaborated experience," a differentiation one cannot make in English? What has become of the enlightened citizen? Why and how is evidence ‘imperialising’ the right to define without any attempt to inherit and renew the past’s educational vocabulary?

We have come to live in a time without profound historical awareness in which we must acknowledge that Hattie, as the world’s most influential and successful educational thinker, does not contribute to the renewal of the pedagogical vocabulary" (p. 5).

Wrigley (2018),

"The problem comes from an inflated and generalised role for statistical studies, a lack of awareness and self-awareness, and the omissions and linearities that arise in order to create an aura of science, order and regularity. The attempt to make learning visible (as Hattie puts it) eclipses older understandings of education as Bildung and pedagogy (both words carrying the sense of human formation). It serves to make invisible the deep aims of education, in terms of what kind of human beings we are forming and what kind of future we hope for" (p. 374).

Wrigley (2018) details a good example of confounding variables,

"One of the lowest-rated categories in the Toolkit is ‘teaching assistants’. Different social contexts, age groups and pupil needs are merged, but the most significant source research was led by Peter Blatchford, who chose to speak back. His research, in fact, pointed to classroom assistants working in conditions where no time was given for guidance from the teacher or for evaluation afterwards. It complained of classroom assistants always being assigned to lower attainers, thus depriving these children of help from a qualified teacher. Blatchford was not suggesting that classroom assistants are ineffective, but pointing to ways in which they could bring greater benefit. We should also recognise that classroom assistants serve a range of purposes, not all of which are measured through attainment.

Clearly, placing classroom assistants near the bottom of the Toolkit’s league table, with a label of ‘low impact for high cost’, could result in schools and academy chains terminating their employment, especially in times of budget cuts. Given these problems, it is only by chance if aggregation brings sound results. Whilst some conclusions may be tactically appealing, for example the low ratings for government-approved practices such as performance pay and streaming/setting, it can be extremely misleading. Admittedly the Toolkit’s authors urge caution:

The evidence it contains is a supplement to rather than a substitute for professional judgement: it provides no guaranteed solutions or quick fixes. . . We think that average impact elsewhere will be useful to schools in making a good ‘bet’ on what might be valuable, or may strike a note of caution when trying out something which has not worked so well in the past. (Higgins et al., 2012)

However, many busy teachers and heads will inevitably take the league table at face value and remain unaware of its many problems" (p. 370).
